Prerequisites

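- Data structures

- Linear algebra

- Probability theory and mathematical statistics

- Advanced mathematics (calculus, Taylor expansion, analytic geometry, Lagrange multipliers)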

Rules

Learn the underlying principles: be someone who masters machine learning techniques, not someone held captive by their dazzling variety.

Roadmap

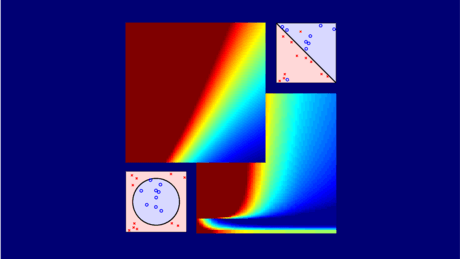

When Can Machines Learn?

- The Learning Problem: algorithm $\mathcal{A}$ takes data $\mathcal{D}$ and hypothesis set $\mathcal{H}$ to get $g$;

- Learning to Answer Yes or No: PLA $\mathcal{A}$ takes linearly separable $\mathcal{D}$ and perceptrons $\mathcal{H}$ to get hypothesis $g$;

- Types of Learning: Binary classification or regression from a batch of supervised data with concrete features;

- Feasibility of Learning: learning is PAC-possible given enough statistical data and finite $|\mathcal{H}|$;
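The PLA bullet above can be made concrete with a minimal sketch. It assumes the data are linearly separable and uses only the standard library; the function name `pla`, the `max_updates` cap, and the toy points are illustrative choices, not part of the original notes.

```python
def pla(points, labels, max_updates=1000):
    """Perceptron Learning Algorithm sketch for linearly separable data.

    points: list of feature tuples (without the bias coordinate).
    labels: list of +1 / -1 labels.
    Returns the weight list w (bias first) once no point is misclassified.
    """
    dim = len(points[0])
    w = [0.0] * (dim + 1)  # w[0] is the bias weight
    for _ in range(max_updates):
        mistake = None
        for x, y in zip(points, labels):
            xb = (1.0,) + tuple(x)  # prepend the constant bias feature
            score = sum(wi * xi for wi, xi in zip(w, xb))
            predicted = 1 if score > 0 else -1  # a score of 0 counts as -1
            if predicted != y:
                mistake = (xb, y)
                break
        if mistake is None:
            return w  # every point is classified correctly
        xb, y = mistake
        # Correct the mistake: move w toward y * x
        w = [wi + y * xi for wi, xi in zip(w, xb)]
    raise RuntimeError("no separating hyperplane found within max_updates")


def classify(w, x):
    """Sign of the perceptron score for a single point x."""
    score = w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))
    return 1 if score > 0 else -1


# Hypothetical toy data, chosen to be linearly separable
toy_points = [(2, 2), (3, 1), (-1, -2), (-2, -1)]
toy_labels = [1, 1, -1, -1]
g = pla(toy_points, toy_labels)
```

Here `g` plays the role of the final hypothesis: the weights returned once PLA stops making mistakes on $\mathcal{D}$. If the data were not separable, this sketch would hit the update cap and raise instead of looping forever.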

Why Can Machines Learn?

- Training versus Testing: the effective price of choice in training is the growth function $m_{\mathcal{H}}(N)$ with a break point;

- Theory of Generalization: $E_{\text{out}} \approx E_{\text{in}}$ is possible if $m_{\mathcal{H}}(N)$ breaks somewhere and $N$ is large enough;

- The VC Dimension: learning happens with finite $d_{\text{VC}}$, large $N$, and low $E_{\text{in}}$;

- Noise and Error: learning can happen with target distribution $P(y|\mathbf{x})$ and low $E_{\text{in}}$ with respect to the error measure $\text{err}$;
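To see why "large $N$" matters in the bullets above, the following sketch evaluates one common form of the VC bound, $4\,m_{\mathcal{H}}(2N)\exp(-\tfrac{1}{8}\epsilon^2 N)$, with the growth function replaced by the polynomial upper bound $(2N)^{d_{\text{VC}}}$. That substitution is an assumption made here (it is the usual loose bound for $d_{\text{VC}} \ge 2$), and the function names are hypothetical.

```python
import math


def vc_bound(n, d_vc, eps):
    """Upper bound on P[|E_in - E_out| > eps] for sample size n.

    Assumes the growth function is bounded by (2n) ** d_vc.
    """
    growth = (2 * n) ** d_vc
    return 4 * growth * math.exp(-(eps ** 2) * n / 8)


def complexity_penalty(n, d_vc, delta):
    """Width of the gap |E_in - E_out| that holds with probability >= 1 - delta.

    Obtained by setting the bound above equal to delta and solving for eps.
    """
    growth = (2 * n) ** d_vc
    return math.sqrt(8 / n * math.log(4 * growth / delta))


# Illustrative values: d_vc = 3, tolerance eps = 0.1
for n in (100, 1_000, 10_000, 100_000):
    print(n, vc_bound(n, d_vc=3, eps=0.1))
```

For small $N$ the bound exceeds 1 and says nothing; it only becomes meaningful once the exponential decay overtakes the polynomial growth term, which is the sense in which a break point plus enough data makes generalization possible.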

How Can Machines Learn?

- Linear Regression: analytic solution with linear regression hypotheses and squared error;

- Logistic Regression: gradient descent on cross-entropy error to get good logistic hypothesis;

- Linear Models for Classification: binary classification via (logistic) regression; multiclass via OVA/OVO decomposition;

- Nonlinear Transformation: nonlinear via nonlinear feature transform plus linear with price of model complexity;
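As an illustration of the logistic regression bullet above, here is a minimal sketch of fixed-step gradient descent on the cross-entropy error $\frac{1}{N}\sum_n \ln\left(1 + \exp(-y_n \mathbf{w}^T \mathbf{x}_n)\right)$ with labels in $\{-1, +1\}$. The learning rate `eta`, the step count, and the toy data are assumptions chosen for readability, not values from the notes.

```python
import math


def sigmoid(s):
    """Logistic function theta(s) = 1 / (1 + exp(-s))."""
    return 1.0 / (1.0 + math.exp(-s))


def cross_entropy_error(w, points, labels):
    """Average cross-entropy error of weights w (bias first)."""
    total = 0.0
    for x, y in zip(points, labels):
        s = w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))
        total += math.log(1.0 + math.exp(-y * s))
    return total / len(points)


def logistic_gd(points, labels, eta=0.1, steps=2000):
    """Fixed-learning-rate gradient descent on the cross-entropy error."""
    dim = len(points[0]) + 1
    n = len(points)
    w = [0.0] * dim
    for _ in range(steps):
        grad = [0.0] * dim
        for x, y in zip(points, labels):
            xb = (1.0,) + tuple(x)  # prepend the constant bias feature
            s = sum(wi * xi for wi, xi in zip(w, xb))
            # Per-example gradient: theta(-y * s) * (-y * x)
            coef = sigmoid(-y * s) * (-y)
            for j in range(dim):
                grad[j] += coef * xb[j] / n
        w = [wi - eta * gj for wi, gj in zip(w, grad)]
    return w


# Hypothetical one-dimensional toy data
lr_points = [(-2.0,), (-1.0,), (1.0,), (2.0,)]
lr_labels = [-1, -1, 1, 1]
w_lr = logistic_gd(lr_points, lr_labels)
```

The resulting weights can be used for binary classification by thresholding `sigmoid` of the score at 0.5, which is the "binary classification via (logistic) regression" idea in the list above.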

How Can Machines Learn Better?

- Hazard of Overfitting: overfitting happens with excessive power, stochastic/deterministic noise, and limited data;

- Regularization: minimizes augmented error, where the added regularizer effectively limits model complexity;

- Validation: (crossly) reserve validation data to simulate testing procedure for model selection;

- Three Learning Principles: Occam’s Razor, Sampling Bias and Data Snooping.
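The regularization and validation bullets above can be combined in a small sketch. It assumes the simplest possible setting, a single weight with no bias term, where the augmented error $\sum_n (w x_n - y_n)^2 + \lambda w^2$ has the closed-form minimizer $w = \sum_n x_n y_n / (\sum_n x_n^2 + \lambda)$. The data, the candidate values of $\lambda$, and all function names are hypothetical.

```python
def ridge_1d(xs, ys, lam):
    """Minimise sum((w * x - y) ** 2) + lam * w ** 2 over a single weight w."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def squared_error(w, xs, ys):
    """Mean squared error of the hypothesis h(x) = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def select_lambda(train, val, candidates):
    """Pick the lambda whose trained weight has the lowest validation error.

    train, val: (xs, ys) pairs; the validation set is never used for fitting.
    Returns (best_lambda, validation_error, weight).
    """
    best = None
    for lam in candidates:
        w = ridge_1d(train[0], train[1], lam)
        err = squared_error(w, val[0], val[1])
        if best is None or err < best[1]:
            best = (lam, err, w)
    return best


# Hypothetical data: noisy samples for training, held-out points for validation
train_set = ([1.0, 2.0, 3.0], [1.2, 1.9, 3.2])
val_set = ([4.0, 5.0], [4.0, 5.0])
chosen = select_lambda(train_set, val_set, candidates=[0.0, 0.1, 1.0, 10.0])
```

A larger $\lambda$ shrinks the weight toward zero, which limits effective model complexity; the held-out set stands in for the test procedure when choosing among the candidates. After selection, a common follow-up is to retrain on all the data with the chosen $\lambda$.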

Postscript

If you spot any problems while reading these notes, or have a different view or line of thinking, feel free to write to me: [email protected]

Notes for the "Machine Learning Techniques" series are also being updated: ML-Techniques-Index