Prerequisites

- Data structures

- Python

- Machine Learning Foundations

Rules

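Start from the principles covered in *Machine Learning Foundations* and go deeper into more sophisticated machine learning models.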

Roadmap

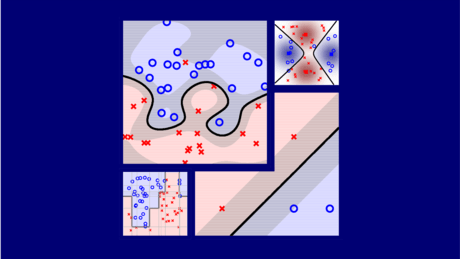

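A central theme of this course is feature transformation. There are three key techniques for handling feature transforms, and each is represented by an important family of models: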

Embedding Numerous Features: Kernel Models

How to exploit and regularize numerous features?

- Linear Support Vector Machine: linear SVM: maximizes the margin, which makes it more robust, and is solvable with quadratic programming;

- Dual Support Vector Machine: dual SVM: another QP with a valuable geometric interpretation and almost no dependence on $\tilde{d}$, the dimension of the transformed feature space;

- Kernel Support Vector Machine: a kernel is a shortcut for (transform + inner product) that removes the dependence on $\tilde{d}$, allowing a spectrum from simple (linear) models to infinite-dimensional (Gaussian) ones, with margin control;

- Soft-Margin Support Vector Machine: allows some margin violations $\xi_n$ while penalizing them by $C$; equivalent to upper-bounding $\alpha_n$ by $C$;

- Kernel Logistic Regression: two-level learning for SVM-like sparse model for soft classification, or using representer theorem with regularized logistic error for dense model;

- Support Vector Regression:
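To make "kernel as a shortcut for (transform + inner product)" concrete, the sketch below compares an explicit quadratic transform with the polynomial kernel $(1 + x^\top x')^2$. The particular feature map `phi2`, the helper names, and the test vectors are illustrative assumptions, not code from the course. The Gaussian kernel is included only to show that it is evaluated directly, since its implicit transform is infinite-dimensional.

```python
import numpy as np

def phi2(x):
    """Explicit quadratic transform whose inner product equals (1 + x.x')^2.

    Features: 1, sqrt(2)*x_i, x_i^2, and sqrt(2)*x_i*x_j for i < j.
    """
    x = np.asarray(x, dtype=float)
    d = x.shape[0]
    feats = [1.0]
    feats.extend(np.sqrt(2.0) * x)
    feats.extend(x ** 2)
    for i in range(d):
        for j in range(i + 1, d):
            feats.append(np.sqrt(2.0) * x[i] * x[j])
    return np.array(feats)

def poly2_kernel(x, xp):
    """Kernel shortcut: O(d) work, no explicit transform is built."""
    return (1.0 + np.dot(x, xp)) ** 2

def gaussian_kernel(x, xp, gamma=1.0):
    """Gaussian (RBF) kernel; its implicit transform is infinite-dimensional."""
    diff = np.asarray(x, dtype=float) - np.asarray(xp, dtype=float)
    return np.exp(-gamma * np.dot(diff, diff))

x = np.array([1.0, 2.0, 3.0])
xp = np.array([0.5, -1.0, 2.0])
print(np.dot(phi2(x), phi2(xp)))  # transform + inner product (10-dim features here)
print(poly2_kernel(x, xp))        # 30.25, the same value via the shortcut
```

The explicit route grows as $O(d^2)$ features for a degree-2 transform, while the kernel stays $O(d)$; that gap is what "removing the dependence on $\tilde{d}$" buys.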
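For the soft-margin idea of tolerating violations $\xi_n$ at a price $C$, a minimal sketch is the unconstrained primal form $\frac{1}{2}\|w\|^2 + C\sum_n \max(0,\, 1 - y_n(w^\top x_n + b))$, where the max term equals the slack $\xi_n$ at the optimum. It is solved here with plain full-batch subgradient descent rather than the QP the notes refer to, and it does not show the dual view ($0 \le \alpha_n \le C$). The step size, epoch count, and toy data are assumptions.

```python
import numpy as np

def soft_margin_svm(X, y, C=1.0, eta=0.01, epochs=2000):
    """Primal soft-margin SVM via subgradient descent on
    0.5*||w||^2 + C * sum_n max(0, 1 - y_n (w.x_n + b))."""
    N, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        viol = margins < 1  # points inside the margin, i.e. slack xi_n > 0
        grad_w = w - C * (y[viol, None] * X[viol]).sum(axis=0)
        grad_b = -C * y[viol].sum()
        w -= eta * grad_w
        b -= eta * grad_b
    return w, b

def slacks(X, y, w, b):
    """Margin violations xi_n = max(0, 1 - y_n (w.x_n + b))."""
    return np.maximum(0.0, 1.0 - y * (X @ w + b))

X = np.array([[2.0, 2.0], [3.0, 3.0], [2.0, 3.0],
              [-2.0, -2.0], [-3.0, -3.0], [-2.0, -3.0]])
y = np.array([1.0, 1.0, 1.0, -1.0, -1.0, -1.0])
w, b = soft_margin_svm(X, y)
print(w, b, slacks(X, y, w, b))
```

A larger `C` penalizes violations more heavily and approaches the hard-margin solution; a smaller `C` tolerates more violations in exchange for a wider margin.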
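The dense variant of kernel logistic regression writes $w = \sum_n \beta_n z_n$ (representer theorem), so training only needs the kernel matrix $K$: minimize $\frac{\lambda}{N}\beta^\top K \beta + \frac{1}{N}\sum_n \log\bigl(1 + \exp(-y_n (K\beta)_n)\bigr)$ over $\beta$. The sketch below does this with plain gradient descent and a Gaussian kernel; the optimizer, `lam`, `gamma`, step size, step count, and toy data are all assumptions, and the two-level SVM-then-logistic variant is not shown.

```python
import numpy as np

def rbf_kernel_matrix(A, B, gamma=1.0):
    """Gaussian kernel matrix K[i, j] = exp(-gamma * ||A_i - B_j||^2)."""
    sq = np.sum(A ** 2, axis=1)[:, None] + np.sum(B ** 2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_klr(X, y, lam=0.01, gamma=1.0, eta=1.0, steps=2000):
    """Gradient descent on the regularized logistic error expressed in beta."""
    N = X.shape[0]
    K = rbf_kernel_matrix(X, X, gamma)
    beta = np.zeros(N)
    for _ in range(steps):
        s = K @ beta  # scores for the training points
        # K is symmetric, so the gradient is (1/N) K (2*lam*beta - y*sigmoid(-y*s))
        grad = K @ (2.0 * lam * beta - y * sigmoid(-y * s)) / N
        beta -= eta * grad
    return beta

def predict_proba(beta, X_train, X_new, gamma=1.0):
    """Estimated P(y = +1 | x) for new points."""
    return sigmoid(rbf_kernel_matrix(X_new, X_train, gamma) @ beta)

# Toy data: a small cluster near the origin (+1) surrounded by outer points (-1).
X = np.array([[0.0, 0.0], [0.2, 0.1], [-0.1, 0.2],
              [2.0, 0.0], [0.0, 2.0], [-2.0, 0.0], [0.0, -2.0]])
y = np.array([1.0, 1.0, 1.0, -1.0, -1.0, -1.0, -1.0])
beta = fit_klr(X, y)
print(predict_proba(beta, X, X))
```

Unlike an SVM, the resulting `beta` is generally dense: essentially every training point keeps a nonzero coefficient.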

Combining Predictive Features: Aggregation Models

How to construct and blend predictive features?

- Blending and Bagging: blending known diverse hypotheses uniformly, linearly, or even non-linearly; obtaining diverse hypotheses from bootstrapped data;
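Bagging obtains diverse hypotheses from bootstrapped data and then blends them uniformly. The sketch below uses 1-D decision stumps as the base learner and a majority vote as the uniform blend; the stump learner, `T=25`, the seed, and the toy data are assumptions chosen for illustration.

```python
import numpy as np

def fit_stump(x, y):
    """1-D decision stump h(z) = s * sign(z - theta) with minimal 0/1 training error."""
    xs = np.unique(x)  # sorted unique values
    thetas = np.concatenate(([xs[0] - 1.0], (xs[:-1] + xs[1:]) / 2.0))
    best_err, best_s, best_theta = np.inf, 1.0, thetas[0]
    for theta in thetas:
        for s in (1.0, -1.0):
            pred = s * np.where(x > theta, 1.0, -1.0)
            err = np.mean(pred != y)
            if err < best_err:
                best_err, best_s, best_theta = err, s, theta
    return lambda z: best_s * np.where(np.asarray(z) > best_theta, 1.0, -1.0)

def bagging(x, y, T=25, seed=0):
    """Train T stumps, each on a bootstrap sample (N draws with replacement)."""
    rng = np.random.default_rng(seed)
    N = len(x)
    hyps = []
    for _ in range(T):
        idx = rng.integers(0, N, size=N)
        hyps.append(fit_stump(x[idx], y[idx]))
    return hyps

def uniform_vote(hyps, z):
    """Uniform blending for classification: majority vote (ties go to +1)."""
    votes = np.sum([h(z) for h in hyps], axis=0)
    return np.where(votes >= 0, 1.0, -1.0)

x = np.linspace(-1.0, 1.0, 20)
y = np.where(x > 0, 1.0, -1.0)
hyps = bagging(x, y, T=25, seed=0)
print(uniform_vote(hyps, np.array([-0.9, 0.9])))
```

Each bootstrap sample misses some points, so the stumps place their thresholds slightly differently; the vote averages out that variation.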

- Adaptive Boosting:

Distilling Implicit Features: Extraction Models

How to identify and learn implicit features?